Compression (Encoder)#

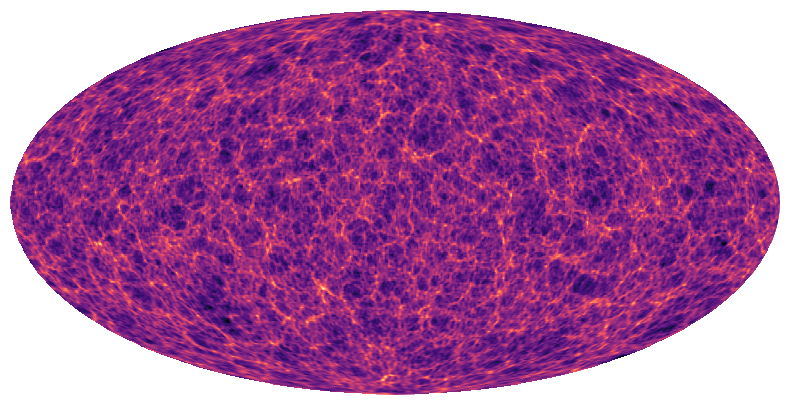

This tutorial demonstrates how to compress a spherical image \(x\) into a (relatively) small number of scattering covariance coefficients which, unlike other popular statistics, encode complex non-Gaussian structural information such as filaments.

Defining the scattering covariance operation as \(\Phi\) we will consider two basic variants: anisotropic coefficients \(\Phi^a(x)\) and isotropic coefficients \(\Phi^i(x)\). Anisotropic coefficients are more expressive but higher dimensionality, whereas isotropic coefficients are less expressive but much lower dimensionality.

So what’s the difference?#

At highest order, anisotropic coefficients encode covariant information between 3 different wavelet scales \(j\) and directions \(\gamma\). On the other hand, isotropic coefficients average over directions \(\gamma\), sampling the mean covariance structure across scales. Note that isotropic coefficients will still capture directional filamentary structure but will be somewhat less expressive.

What’s the latent dimensionality?#

In this code we restrict ourselves to dyadic wavelets. The maximum number of wavelet scales is determined by the bandlimit \(L\) to be \(J = \log_2L\), and the number of directions sampled is simply \(2N-1\). So, putting this together for the highest order anisotropic coefficients, and assuming a minimum wavelet scale \(J_\text{min} = 0\), the dimensionality is

So increasing the number of directions can inflate the size of the representation, however for almost all applications \(N\leq3\) is entirely sufficient (finer directional information is captured through the covariance between coarser directions). For isotropic coefficients the number of coefficients is given by

which is extremely compressed.

Import the package#

Lets first import S2SCAT and some basic packages.

[1]:

import sys

IN_COLAB = 'google.colab' in sys.modules

# Install a spherical plotting package.

!pip install cartopy &> /dev/null

# Install s2fft and data if running on google colab.

if IN_COLAB:

!pip install s2scat &> /dev/null

!pip install numpy==1.23.5 &> /dev/null

!mkdir data/

!wget https://github.com/astro-informatics/s2scat/raw/main/notebooks/data/target_map_lss.npy -P data/ &> /dev/null

[2]:

import jax

jax.config.update("jax_enable_x64", True)

from matplotlib import pyplot as plt

import numpy as np

import cartopy.crs as ccrs

import s2scat, s2fft

Configure the problem#

Lets set up the target field we are aiming to emulate, and the hyperparameters of the scattering covariance representation we will work with.

[3]:

L = 256 # Spherical harmonic bandlimit.

N = 3 # Azimuthal bandlimit (directionality).

J_min = 2 # Minimum wavelet scale.

reality = True # Input signal is real.

recursive = False # Use the fully precompute transform.

# Lets load in the spherical field we wish to model

x = np.load('data/target_map_lss.npy')

xlm = s2fft.forward_jax(x, L, reality=reality)[:,L-1:]

Let’s view the input map.

[4]:

plt.figure(figsize=(10,5))

ax = plt.axes(projection=ccrs.Mollweide())

im = ax.imshow(x, transform=ccrs.PlateCarree(), cmap='magma')

plt.axis('off')

plt.show()

Build the latent representation#

We have included an easy to use function which will return the latent encoder as a callable function. This is done by call the following function.

[5]:

encoder = s2scat.build_encoder(L, N, J_min, reality, recursive)

from which we can map either a single set harmonic coefficients \(x_{\ell m}\)

[6]:

covariances = encoder(xlm)

or a batch of images \(x^i_{\ell m}\) to their latent vectors. Note that \(i\) is a batch index which should be on axis 0!

[7]:

xlm_batched = jax.numpy.stack([xlm, xlm, xlm, xlm, xlm, xlm])

covariances_batched = encoder(xlm_batched)

So what is actually in this covariances Tuple? Essentially it is a list of:

covariances[0]: The field mean.

covariances[1]: The field variance.

covariances[2]: The mean power in each direction (\(S_1\)).

covariances[3]: The mean power in each wavelet scale (\(S_2\)).

covariances[4]: The fourth order covariance between two wavelet scales and two directions, defined as

covariances[5]: The sixth order covariance between three wavelet scales and three directions, defined as

[8]:

for i in range(6):

print("Number of coefficients for anisotropic covariance[{}]: {}".format(i, covariances[i].shape))

Number of coefficients for anisotropic covariance[0]: ()

Number of coefficients for anisotropic covariance[1]: ()

Number of coefficients for anisotropic covariance[2]: (35,)

Number of coefficients for anisotropic covariance[3]: (35,)

Number of coefficients for anisotropic covariance[4]: (525,)

Number of coefficients for anisotropic covariance[5]: (11375,)

Compute isotropic scattering covariances#

Instead, lets now compute the isotropic version of these statistics. To do this, we’ll just tweak our latent encoder

[9]:

encoder = s2scat.build_encoder(L, N, J_min, reality, recursive, isotropic=True)

and encode our data

[10]:

covariances = encoder(xlm)

These have much lower dimensionality that the anisotropic coefficients.

[11]:

for i in range(6):

print("Number of coefficients for isotropic covariance[{}]: {}".format(i, covariances[i].shape))

Number of coefficients for isotropic covariance[0]: ()

Number of coefficients for isotropic covariance[1]: ()

Number of coefficients for isotropic covariance[2]: (35,)

Number of coefficients for isotropic covariance[3]: (35,)

Number of coefficients for isotropic covariance[4]: (21,)

Number of coefficients for isotropic covariance[5]: (91,)

But they still encode significant non-Gaussian structural information!

What next?#

This compression may well be sufficient as it is for many applications. Nevertheless, one may wish to go even further and consider applying other compression approaches as an additional stage.

In any case there are a number of use cases for the scattering covariances that extend beyond compression, such as emulation, where the scattering coefficients are used for a statistical generative model. An overview for this is provided in another example notebook.