Basic Usage

In this interactive tutorial we demonstrate basic usage of harmonic, using emcee as the sampler.

[1]:

%%capture

# Install packages

%pip install harmonic emcee getdist

[1]:

%%capture

# Import modules

import numpy as np

import matplotlib.pyplot as plt

import harmonic as hm

from functools import partial

import emcee

import jax.numpy as jnp

Define Bayesian posterior function

Now we will need to define the log-posterior function of interest.

As a working example for this basic tutorial we consider a log-likelihood given a standard 2-dimensional Gaussian

where for simplicity we have taken the mean \(\mu=0\) and dropped scaling factors, and assume a trivial uniform prior over an infinite interval. Under such conditions the log-posterior is given by

[2]:

def ln_posterior(x, inv_cov):

"""Compute log_e of posterior of n dimensional multivariate Gaussian.

Args:

x: Position at which to evaluate posterior.

Returns:

double: Value of posterior at x.

"""

return -jnp.dot(x,jnp.dot(inv_cov,x))/2.0

Compute samples using emcee

We then sample from the posterior using an MCMC algorithm. While any MCMC approach can be used we sample using the emcee package.

First we will need to define and initialise some variables.

[3]:

# Define parameters for emcee sampling

ndim = 5 # Dimensions

nchains = 200 # total number of chains to compute

samples_per_chain = 5000 # number of samples per chain

nburn = 2000 # number of samples to discard as burn in

# Construct a trivial inverse covariance (identity matrix)

inv_cov = np.zeros((ndim,ndim))

diag_cov = np.ones(ndim)

np.fill_diagonal(inv_cov, diag_cov)

Now we need to run the sampler.

[4]:

# initialise random seed

np.random.seed(1)

# Set initial random position and state

pos = np.random.rand(ndim * nchains).reshape((nchains, ndim))

rstate = np.random.get_state()

# Instantiate and execute sampler

sampler = emcee.EnsembleSampler(nchains, ndim, ln_posterior, args=[inv_cov])

(pos, prob, state) = sampler.run_mcmc(pos, samples_per_chain, rstate0=rstate)

# Collect samples into contiguous numpy arrays (discarding burn in)

samples = np.ascontiguousarray(sampler.chain[:,nburn:,:])

lnprob = np.ascontiguousarray(sampler.lnprobability[:,nburn:])

Compute evidence using harmonic

harmonic requires only posterior samples. There are no constraints on the type of sampling algorithm used.

Once we have posterior samples to hand, they can be post-processed using harmonic to compute the Bayesian evidence.

Collating samples using harmonic.chains class

We first configure the chains into a harmonic-friendly shape, which we do as follows.

[5]:

# Instantiate harmonic's chains class

chains = hm.Chains(ndim)

chains.add_chains_3d(samples, lnprob)

Since we will subsequently learn the target distribution \(\varphi\) we split the samples into training and inference sets (we often use the common machine learning terminology “test” for the inference data-set).

[6]:

# Split the chains into the ones which will be used to train the machine

# learning model and for inference

chains_train, chains_infer = hm.utils.split_data(chains, training_proportion=0.5)

Train the machine learning model

We simply select the model we wish to adopt and fit the model.

[7]:

# Select RealNVP Model

n_scaled_layers = 2

n_unscaled_layers = 4

temperature = 0.8

model = hm.model.RealNVPModel(ndim, standardize=True, temperature=temperature)

epochs_num = 20

# Train model

model.fit(chains_train.samples, epochs=epochs_num, verbose= True)

Training NF, current loss: 6.947: 100%|██████████| 20/20 [01:51<00:00, 5.59s/it]

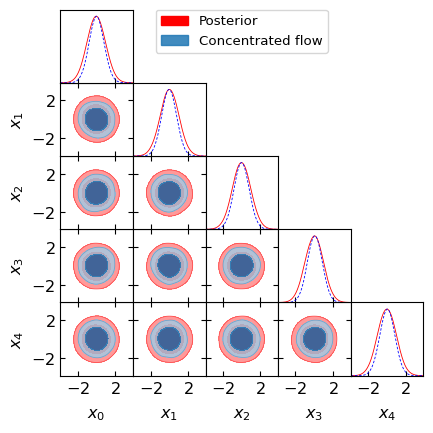

Posterior triangle plot

Let’s also plot slices of the posterior using these samples to see what we’re working with! It is important for the concentrated flow (here we set temperature equal to 0.8) to be contained within the posterior. If this is not the case, the evidence estimate will not be accurate. If the flow is not contained within the posterior, try reducing the temperature or learning the posterior better by training for longer or changing the model architecture or hyperparameters.

[8]:

samples = samples.reshape((-1, ndim))

samp_num = samples.shape[0]

flow_samples = model.sample(samp_num)

hm.utils.plot_getdist_compare(samples, flow_samples)

Compute the Bayesian evidence

Finally we simply compute the learnt harmonic mean estimator as follows.

[9]:

# Instantiate harmonic's evidence class

ev = hm.Evidence(chains_infer.nchains, model)

# Pass the evidence class the inference chains and compute the evidence!

ev.add_chains(chains_infer)

ln_inv_evidence = ev.ln_evidence_inv

err_ln_inv_evidence = ev.compute_ln_inv_evidence_errors()

Results

Let’s check the evidence value computed.

Analytic value

As this is a standard 2-dimensional Gaussian the evidence is analytically tractable and given by

[10]:

def ln_analytic_evidence(ndim, cov):

"""Compute analytic evidence for nD Gaussian.

Args:

ndim: Dimension of Gaussian.

cov: Covariance matrix.

Returns:

double: Analytic evidence.

"""

ln_norm_lik = 0.5*ndim*np.log(2*np.pi) + 0.5*np.log(np.linalg.det(cov))

return ln_norm_lik

ln_inv_evidence_analytic = -ln_analytic_evidence(ndim, inv_cov)

Let’s compare the value computed by harmonic and analytically

[11]:

print('ln inverse evidence (harmonic) = {} +/- {}'.format(ln_inv_evidence, err_ln_inv_evidence))

print('ln inverse evidence (analytic) = {}'.format(ln_inv_evidence_analytic))

print('nsigma = {}'.format(np.abs(ln_inv_evidence - ln_inv_evidence_analytic) / err_ln_inv_evidence))

ln inverse evidence (harmonic) = -4.595915794372559 +/- (-0.003410517655520678, 0.0033989255487287665)

ln inverse evidence (analytic) = -4.594692666023363

nsigma = [-0.35863422 0.35985735]

As expected, the evidence computed by harmonic is close to that computed analytically.